We’ve all been there: reading something online that makes our blood boil and ignites our passion for justice and our desire to make things right. If you are not familiar with such feelings, spend some time on Twitter and you soon will be. Religion, politics, vaccines, sexuality, racism: all can trigger a variety of emotions. But why do we get so angry when discussing these moral topics? How do we form our personal moral viewpoints? And how can a deeper understanding of morality help us all get along?

In his book The Righteous Mind, Jonathan Haidt introduces his research on Moral Foundations Theory, which sheds light on the division we see around us today. Below is a summary of the book’s key messages. I hope that reading it helps you better understand your own moral framework and, as you do, gain some compassionate understanding of people whose views oppose your own.

This Book in a Paragraph

Our morality is intuited first and reasoned second. We feel that something is wrong, then use reason and logic to justify that feeling. These moral intuitions can be described as moral “tastebuds”. Each person has a different palate for what is right and wrong, influenced by their biology, upbringing and society. Haidt calls your personal moral palate your “Moral Matrix”. Understanding the six tastebuds can help explain why you might want to fight with someone about politics or religion: your rational mind is working to justify your moral position. It can also help you empathise with people whose matrices are completely different from your own.

“So the next time you find yourself seated beside someone from another matrix, give it a try. Don’t just jump right in. Don’t bring up morality until you’ve found a few points of commonality or in some other way established a bit of trust. And when you do bring up issues of morality, try to start with some praise, or with a sincere expression of interest. We’re all stuck here for a while, so let’s try to work it out.”

Intuitions Come First, Strategic Reasoning Second

Would you sign a piece of paper that said, “I _________, hereby sell my soul, after death, to Scott Murphy, for the sum of $2”?

Would you drink juice that had had a sterilized and cleaned cockroach dunked in it?

Is it wrong for first cousins to get married?

Why? Why not?

Haidt draws on his own research and that of other psychologists in the field of moral reasoning, and concludes that moral judgement and the justification of that judgement are separate processes. He writes:

“The roach juice and soul-selling dilemmas instantly make people “see that” they want to refuse, but they don’t feel much conversational pressure to offer reasons. Not wanting to drink roach-tainted juice isn’t a moral judgment, it’s a personal preference. Saying “Because I don’t want to” is a perfectly acceptable justification for one’s subjective preferences. Yet moral judgments are not subjective statements; they are claims that somebody did something wrong. I can’t call for the community to punish you simply because I don’t like what you’re doing. I have to point to something outside of my own preferences, and that pointing is our moral reasoning. We do moral reasoning not to reconstruct the actual reasons why we ourselves came to a judgment; we reason to find the best possible reasons why somebody else ought to join us in our judgment.”

How to Win an Argument

Haidt presents the social intuitionist model, a diagram showing how we intuit our morality before we can provide reasoning for it, and how interactions with other people can shape our own judgements.

Moral and political arguments can be so frustrating because both sides try to outreason one another (Combat A’s Reasoning with B’s Reasoning) on topics that are primarily intuited. Change happens not when we dispute someone else’s reasoning, but when we deeply understand, and appeal to, the intuitions underlying their position (Links 3 and 4).

“If you really want to change someone’s mind on a moral or political matter, you’ll need to see things from that person’s angle as well as your own. And if you do truly see it the other person’s way—deeply and intuitively—you might even find your own mind opening in response. Empathy is an antidote to righteousness, although it’s very difficult to empathize across a moral divide.”

The Elephant and The Rider

The two systems in our brain are like an elephant and its rider. The elephant is our quick, automatic, emotional system; the rider is our slower system of thinking and reasoning. In any battle between the two, the elephant usually wins, and we find ways to justify our emotions about a topic (even when our logic is absurd).

“These responses are instinctive and almost instant, they are more akin to judgements animals make as they move through the world, feeling themselves drawn toward or away from various things. Moral Judgement is mostly done by the elephant.”

“Therefore, if you want to change someone’s mind about a moral or political issue, talk to the elephant first. If you ask people to believe something that violates their intuitions, they will devote their efforts to finding an escape hatch – a reason to doubt your argument or conclusion. They will almost always succeed.”

The Lies We Can Justify

Give the conscious, reasoning side of our mind a motive and it can justify almost any behaviour. Like a press secretary who automatically defends any poor position the president takes, we can lie, cheat and steal while convincing ourselves we are doing it for the right reasons. Our reasoning can blind us to the truth and become a tool for great evil.

“We often ask ourselves “Can I believe it” when we want to believe something, but “Must I believe it” when we don’t want to believe. The answer is almost always yes to the first question and no to the second.”

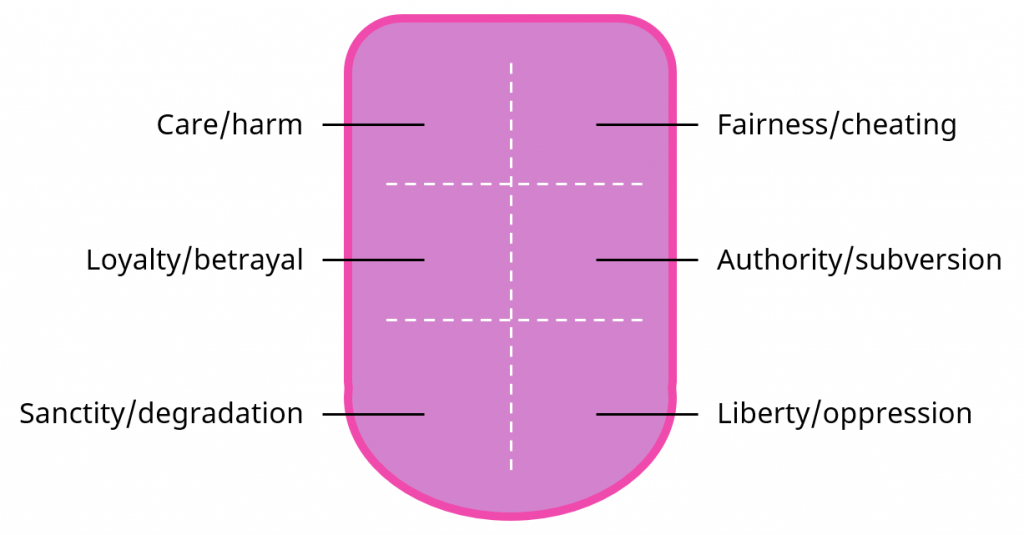

The Righteous Mind is Like a Tongue With Six Taste Receptors

Our moral intuitions take many forms. Haidt’s cross-cultural research identified six moral foundations. We can describe these foundations as moral “tastebuds”, with each person having a different palate for what is right and wrong, influenced by their biology, upbringing and society. Haidt calls your personal moral palate your “Moral Matrix” because, as in the movie The Matrix, we live within our moral framework and sometimes believe it is all that exists. And like many religions, the movie conveys the message of waking up and seeing the world from a new, broader perspective.

“I began to see that many moral matrices coexist within each nation. Each matrix provides a complete, unified, and emotionally compelling worldview, easily justified by observable evidence and nearly impregnable to attack by arguments from outsiders.”

The Six Moral Tastebuds

Care/harm foundation: evolved in response to the adaptive challenge of caring for vulnerable children. It makes us sensitive to signs of suffering and need; it makes us despise cruelty and want to care for those who are suffering.

Example triggers: Cute puppies and kittens

Fairness/cheating foundation: evolved in response to the adaptive challenge of reaping the rewards of cooperation without getting exploited. It makes us sensitive to indications that another person is likely to be a good (or bad) partner for collaboration and reciprocal altruism. It makes us want to see cheaters punished and good citizens rewarded in proportion to their deeds.

Example triggers: Marital fidelity, broken vending machines

Loyalty/betrayal foundation: evolved in response to the adaptive challenge of forming and maintaining coalitions. It makes us sensitive to signs that another person is (or is not) a team player. It makes us trust and reward such people, and it makes us want to hurt, ostracize, or even kill those who betray us or our group.

Example triggers: Sports teams, nations

Authority/subversion foundation: evolved in response to the adaptive challenge of forging relationships that will benefit us within social hierarchies. It makes us sensitive to signs of rank or status, and to signs that other people are (or are not) behaving properly, given their position.

Example triggers: Bosses, respected professionals

Sanctity/degradation foundation: evolved initially in response to the adaptive challenge of the omnivore’s dilemma, and then to the broader challenge of living in a world of pathogens and parasites. It includes the behavioral immune system, which can make us wary of a diverse array of symbolic objects and threats. It makes it possible for people to invest objects with irrational and extreme values—both positive and negative—which are important for binding groups together (for example a flag or a cross).

Example triggers: Immigration, deviant sexuality

Liberty/oppression foundation: evolved in response to the tendency of some hierarchies to fall into tyranny. It makes people notice and resent any sign of attempted domination, and it triggers an urge to band together to resist or overthrow bullies and tyrants. This foundation supports the egalitarianism and antiauthoritarianism of the left, as well as the don’t-tread-on-me and give-me-liberty antigovernment anger of libertarians and some conservatives.

Example triggers: Vaccine mandates, authoritarianism

Moral Foundations and Political Viewpoints

Haidt found he was able to predict an individual’s political leaning based on their moral matrix:

“Liberals have a three-foundation morality, whereas conservatives use all six. Liberal moral matrices rest on the Care/harm, Liberty/oppression, and Fairness/cheating foundations, although liberals are often willing to trade away fairness (as proportionality) when it conflicts with compassion or with their desire to fight oppression. Conservative morality rests on all six foundations, although conservatives are more willing than liberals to sacrifice Care and let some people get hurt in order to achieve their many other moral objectives.”

The Liberal Blind Spot

Haidt shows how this three-foundation matrix creates a blind spot: strong liberals may fail to understand that conservatives still have care and fairness as part of their moral matrix. Liberals may also act hastily to change moral systems that serve essential functions for many people. Haidt writes:

“In a study I did with Jesse Graham and Brian Nosek, we tested how well liberals and conservatives could understand each other. We asked more than two thousand American visitors to fill out the Moral Foundations Questionnaire. One-third of the time they were asked to fill it out normally, answering as themselves. One-third of the time they were asked to fill it out as they think a “typical liberal” would respond. One-third of the time they were asked to fill it out as a “typical conservative” would respond. This design allowed us to examine the stereotypes that each side held about the other. More important, it allowed us to assess how accurate they were by comparing people’s expectations about “typical” partisans to the actual responses from partisans on the left and the right. Who was best able to pretend to be the other? The results were clear and consistent. Moderates and conservatives were most accurate in their predictions, whether they were pretending to be liberals or conservatives. Liberals were the least accurate, especially those who described themselves as “very liberal.” The biggest errors in the whole study came when liberals answered the Care and Fairness questions while pretending to be conservatives. When faced with questions such as “One of the worst things a person could do is hurt a defenseless animal” or “Justice is the most important requirement for a society,” liberals assumed that conservatives would disagree. If you have a moral matrix built primarily on intuitions about care and fairness (as equality)… what else could you think?”

“Nonetheless, if you are trying to change an organization or a society and you do not consider the effects of your changes on moral capital, you’re asking for trouble. This, I believe, is the fundamental blind spot of the left… Conversely, while conservatives do a better job of preserving moral capital, they often fail to notice certain classes of victims, fail to limit the predations of certain powerful interests, and fail to see the need to change or update institutions as times change.”

I recommend reading George Orwell’s “The Road to Wigan Pier” for more information on this topic.

Morality Binds and Blinds

Whilst evolution selects mostly for selfish behaviours, Haidt makes the case that at least some of our evolution must have been driven by behaviours that protected the group. He says we are 90% selfish chimp and 10% groupish bee.

“My hypothesis… is that human beings are conditional hive creatures. We have the ability (under special conditions) to transcend self-interest and lose ourselves (temporarily and ecstatically) in something larger than ourselves. That ability is what I’m calling the hive switch. The hive switch, I propose, is a group-related adaptation that can only be explained “by a theory of between-group selection,” as Williams said. It cannot be explained by selection at the individual level. (How would this strange ability help a person to outcompete his neighbours in the same group?) The hive switch is an adaptation for making groups more cohesive, and therefore more successful in competition with other groups.”

Our bee nature does not connect us with just anyone around us. Our ability to feel connected and to group up with others is selective: we are more likely to share the emotions of people we feel share our moral viewpoint (those in the “in” group). Haidt describes an experiment comparing how people responded when a player who had acted fairly in a game was shocked with how they responded when a player who had acted selfishly was shocked:

“The subjects used their mirror neurons, empathized, and felt the other’s pain. But when the selfish player got a shock, people showed less empathy, and some even showed neural evidence of pleasure. In other words, people don’t just blindly empathize; they don’t sync up with everyone they see. We are conditional hive creatures. We are more likely to mirror and then empathize with others when they have conformed to our moral matrix than when they have violated it.”

Applying our Groupish Tendencies to Organisations

An awareness of Moral Foundations Theory and of people’s bee-like nature can help us build organisations that bond people closely together, so that everyone is “singing from the same hymnbook”, as the saying goes. Below are three tips Haidt gives for applying our groupish tendencies to the world of business.

Increase similarity, not diversity: “To make a human hive, you want to make everyone feel like a family. So don’t call attention to racial and ethnic differences; make them less relevant by ramping up similarity and celebrating the group’s shared values and common identity. A great deal of research in social psychology shows that people are warmer and more trusting toward people who look like them, dress like them, talk like them, or even just share their first name or birthday. There’s nothing special about race. You can make people care less about race by drowning race differences in a sea of similarities, shared goals, and mutual interdependencies.”

Exploit synchrony: Find reasons to move, march, sing, dance, and exercise together. Individuals who move in sync are saying “We are one, we are a team”.

Create healthy competition among teams, not individuals: “Studies show that intergroup competition increases love of the in-group far more than it increases dislike of the out-group. Intergroup competitions, such as friendly rivalries between corporate divisions, or intramural sports competitions, should have a net positive effect on hivishness and social capital. But pitting individuals against each other in a competition for scarce resources (such as bonuses) will destroy hivishness, trust, and morale.”

The ideas above connect nicely with the Social Identity Theory of Leadership, which describes how effective leaders can promote connectedness to improve team outcomes.

Can’t We All Disagree More Constructively?

We need the yin and yang of conservatives and liberals to make effective moral choices for society: choices that are neither blind to the needs of the marginalised nor blind to the value of the moral capital that societies have built up over centuries. There is wisdom on both the left and the right that we should understand and value:

Yin: Liberal Wisdom

1. Governments Can and Should Restrain Corporate Superorganisms:

It’s a common theme in sci-fi to see mega-corporations ruling the universe while governments are powerless to restrain them. Some degree of government oversight is needed to keep these superorganisms morally accountable for their actions.

2. Some Problems Really Can Be Solved by Regulation:

Removing lead from petrol is a great example of a government intervention that has benefited people across the political spectrum, saving lives, IQ points, money, and moral capital. An Australian example is the success of our cigarette advertising and tax regulation in improving both the health and the finances of Australians.

“When conservatives object that liberal efforts to intervene in markets or engage in “social engineering” always have unintended consequences, they should note that sometimes those consequences are positive.”

Yang: Libertarian/Social Conservative Wisdom

1. Markets Are Miraculous:

When businesses don’t perform efficiently, they tend to go bankrupt. Government departments, however, face no market competition and so have little motivation to improve. Competition drives prices down and creates opportunity and resources for millions. Interfering in the workings of markets can result in extraordinary harm on a vast scale.

2. You Don’t Help The Bees By Destroying the Hive:

Large-scale human societies are nearly miraculous achievements, and our morality has developed over time within groups. When liberals work to break down groups in the name of equality and fairness, they sometimes destroy valuable structures in the process, which can end up causing more harm to the marginalised, not less.

“On issue after issue, it’s as though liberals are trying to help a subset of bees (which really does need help) even if doing so damages the hive. Such “reforms” may lower the overall welfare of a society, and sometimes they even hurt the very victims liberals were trying to help.”

Conclusion

Partisanship is on the rise. We will only learn to get along if we lay down the weapons of self-righteousness and take up empathy and a willingness to have difficult moral conversations. It’s time to follow the white rabbit and wake up from your moral matrix.